Big Data Technology

Intimidating or not, Big Data is a natural result of our collective obsession with technology. Put simply, the term refers to the mountainous volumes of data that companies, and even individuals, can amass. Around a decade ago, only the scientific community had what it took to wade through reams of data. Today, almost every field faces the need for this kind of diligence.

We do have software that can handle large amounts of data; however, Big Data now refers to massive data sets that commonly used tools cannot handle. This may sound strange, but a single Big Data set can now range in size from a few dozen terabytes to petabytes.

A recent report by consultants McKinsey found that in 15 of the U.S. economy’s 17 sectors, companies with more than 1,000 employees store, on average, over 235 terabytes of data, more data than is contained in the U.S. Library of Congress.

Though Big Data may sound futuristic, processing such huge volumes of data efficiently and within a reasonable time requires specialized technologies. Here are some of the technologies that can be applied to handling big data.

Massively Parallel Processing (MPP) – This involves the coordinated processing of a program by many processors, often hundreds of them. Each processor uses its own operating system and memory and works on a different part of the program, and the processors communicate with one another through a messaging interface. An MPP system is also known as a “loosely coupled” or “shared nothing” system.
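As a rough illustration of the shared-nothing idea, the Python sketch below splits a data set into disjoint partitions, hands each one to a separate worker, and lets the workers report back only through messages on a queue. It is a single-machine simulation under stated assumptions: threads stand in for the independent processors (in a real MPP system each would have its own operating system and memory), the code never shares state between them except through the queue, and the names `node` and `mpp_sum_of_squares` are hypothetical.

```python
import queue
import threading


def node(node_id, partition, outbox):
    """Hypothetical 'node': it sees only its own partition and reports
    its result solely as a message on the outbox queue."""
    partial = sum(x * x for x in partition)
    outbox.put((node_id, partial))


def mpp_sum_of_squares(data, num_nodes=4):
    # Coordinator: give each node a disjoint slice (slicing copies the data)
    partitions = [data[i::num_nodes] for i in range(num_nodes)]
    outbox = queue.Queue()  # the only channel between nodes and coordinator
    nodes = [
        threading.Thread(target=node, args=(i, part, outbox))
        for i, part in enumerate(partitions)
    ]
    for t in nodes:
        t.start()
    for t in nodes:
        t.join()
    # Gather exactly one message per node and combine the partial results
    partials = dict(outbox.get() for _ in nodes)
    return sum(partials.values())


print(mpp_sum_of_squares(list(range(1000))))  # 332833500
```

The coordinator only ever sees small partial results, which is what lets this pattern scale when the partitions live on separate machines.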

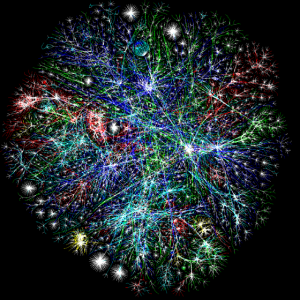

A distributed file system (or network file system) allows client nodes to access files over a computer network. This lets a number of users working on multiple machines share files and storage resources. Client nodes do not access the underlying block storage directly; instead, they interact with the file system through a network protocol. This makes it possible to restrict access to the file system according to access lists or capabilities on both servers and clients, depending on how the protocol is designed.
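To make the client/server split concrete, here is a minimal in-process sketch, assuming a toy request/response protocol of plain dictionaries and a simple per-file access list. `FileServer`, `Client`, the `"op"` message field and the `"grant"` operation are all hypothetical; real network file systems use their own protocols and permission models. The point it shows is that clients never touch the stored bytes, they only send requests that the server checks against its access list.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FileServer:
    """Hypothetical server: it alone owns the stored bytes and the access lists."""
    files: dict = field(default_factory=dict)  # path -> bytes in server storage
    acl: dict = field(default_factory=dict)    # path -> set of allowed users

    def handle(self, request: dict) -> dict:
        # Every client interaction arrives as a protocol message
        op, user, path = request["op"], request["user"], request["path"]
        if op == "write":
            allowed = self.acl.setdefault(path, {user})  # first writer owns the file
            if user not in allowed:
                return {"status": "denied"}
            self.files[path] = request["data"]
            return {"status": "ok"}
        if path not in self.files:
            return {"status": "not_found"}
        if user not in self.acl[path]:
            return {"status": "denied"}
        if op == "read":
            return {"status": "ok", "data": self.files[path]}
        if op == "grant":
            self.acl[path].add(request["target"])
            return {"status": "ok"}
        return {"status": "bad_request"}


class Client:
    """Hypothetical client node: its only channel to the data is `send`."""

    def __init__(self, user: str, send: Callable[[dict], dict]):
        self.user = user
        self._send = send  # stands in for the network connection

    def write(self, path: str, data: bytes) -> dict:
        return self._send({"op": "write", "user": self.user, "path": path, "data": data})

    def read(self, path: str) -> dict:
        return self._send({"op": "read", "user": self.user, "path": path})

    def grant(self, path: str, target: str) -> dict:
        return self._send({"op": "grant", "user": self.user, "path": path, "target": target})


server = FileServer()
alice = Client("alice", server.handle)
bob = Client("bob", server.handle)
alice.write("/shared/report.csv", b"region,sales\n")
print(bob.read("/shared/report.csv")["status"])  # denied
alice.grant("/shared/report.csv", "bob")
print(bob.read("/shared/report.csv")["status"])  # ok
```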

Apache Hadoop is a key technology for storing and analyzing big data. Apache Hadoop is an open source software project that enables the distributed processing of large data sets across clusters of commodity servers. It can scale up from a single server to thousands of machines with a very high degree of fault tolerance. Instead of relying on high-end hardware, these clusters get their resiliency from the software’s ability to detect and handle failures at the application layer.
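Hadoop’s processing model is MapReduce: a map step runs independently on each input split, a shuffle groups the emitted key/value pairs by key, and a reduce step combines each group. The plain-Python sketch below simulates that flow for a word count on one machine; it does not use Hadoop’s actual API. It also mimics the idea of handling failures in software by re-running a failed map task, where `run_with_retries`, its `max_attempts=4` limit and the `flaky_map` fault are assumptions chosen for illustration.

```python
from collections import defaultdict


def map_phase(split):
    # Map: emit a (word, 1) pair for every word in one input split
    return [(word.lower(), 1) for word in split.split()]


def reduce_phase(word, counts):
    # Reduce: combine all values emitted for one key
    return word, sum(counts)


def run_with_retries(task, arg, max_attempts=4):
    # Fault handling in software: a task that raises is re-run, up to max_attempts times
    for attempt in range(1, max_attempts + 1):
        try:
            return task(arg)
        except Exception:
            if attempt == max_attempts:
                raise


def word_count(splits, map_task=map_phase):
    # Each split is mapped independently (on a cluster, on different machines)
    mapped = [run_with_retries(map_task, s) for s in splits]
    # Shuffle: group the emitted values by key
    groups = defaultdict(list)
    for pairs in mapped:
        for word, one in pairs:
            groups[word].append(one)
    return dict(reduce_phase(w, c) for w, c in sorted(groups.items()))


failures = {"count": 0}


def flaky_map(split):
    # Hypothetical fault: the very first call fails, as if a worker node had crashed
    if failures["count"] == 0:
        failures["count"] += 1
        raise RuntimeError("simulated node failure")
    return map_phase(split)


splits = ["big data big clusters", "data on commodity servers"]
print(word_count(splits, map_task=flaky_map))
# {'big': 2, 'clusters': 1, 'commodity': 1, 'data': 2, 'on': 1, 'servers': 1}
```

Because the failed map task is simply re-run, the final counts are the same as if nothing had gone wrong.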

Data-intensive computing is a class of parallel computing applications that use a data-parallel approach to process big data. It works on the principle of co-locating the data with the programs or algorithms that perform the computation. A parallel and distributed system of interconnected stand-alone computers, working together as a single integrated computing resource, is used to process and analyze big data.
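The co-location principle is often summed up as “move the computation to the data”. The sketch below shows one simple way a scheduler might apply it, assuming a hypothetical cluster where each data block is replicated on two nodes: every task is assigned to a node that already stores its block (the least-loaded one among them), the function runs there, and only the small per-node results are combined. The layout in `BLOCK_LOCATIONS`, the contents of `BLOCK_DATA`, and the greedy scheduling rule are all illustrative assumptions, not how any particular system schedules work.

```python
from collections import Counter, defaultdict

# Hypothetical cluster layout: the nodes holding a replica of each data block
BLOCK_LOCATIONS = {
    "block-0": ["node-a", "node-b"],
    "block-1": ["node-b", "node-c"],
    "block-2": ["node-a", "node-c"],
    "block-3": ["node-c", "node-a"],
}

# Hypothetical contents of each block
BLOCK_DATA = {
    "block-0": [1, 2, 3],
    "block-1": [4, 5],
    "block-2": [6],
    "block-3": [7, 8, 9],
}


def schedule_local(block_locations):
    """Assign each block's task to a node that already stores that block."""
    load = Counter()
    plan = {}
    for block, nodes in sorted(block_locations.items()):
        # Least-loaded replica holder wins; ties go to the first node listed
        chosen = min(nodes, key=lambda n: load[n])
        plan[block] = chosen
        load[chosen] += 1
    return plan


def run_locally(plan, block_data, fn):
    per_node = defaultdict(int)
    for block, node in plan.items():
        # fn runs where the block lives; only its small result leaves the node
        per_node[node] += fn(block_data[block])
    return dict(per_node)


plan = schedule_local(BLOCK_LOCATIONS)
print(plan)
print(run_locally(plan, BLOCK_DATA, sum))  # {'node-a': 6, 'node-b': 9, 'node-c': 30}
```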

According to IBM, 80 per cent of the world’s data is unstructured, and most businesses don’t even attempt to use this data to their advantage. Once the technologies for analyzing big data mature, it will become easier for companies to analyze massive datasets, identify patterns and then plan their moves strategically around consumer requirements identified from historical data.

Web Development
